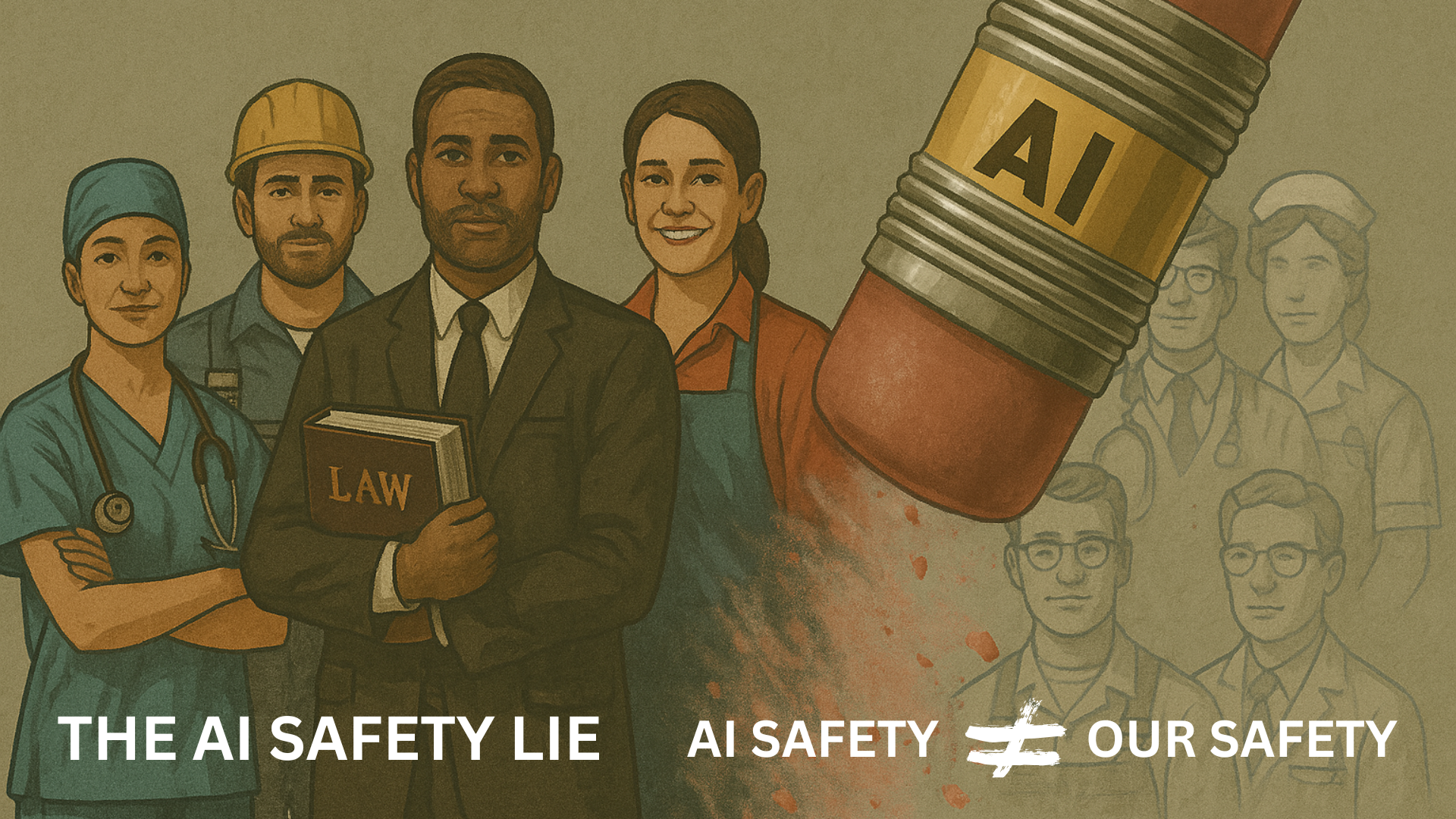

The Misleading Comfort of Corporate Promises

When AI companies say, “We take safety very seriously,” most people assume that means protecting the public — maybe even protecting your job.

But that’s a comforting illusion.

What AI Safety Actually Means in Corporate Circles

In practice, corporate AI “safety” usually comes down to three things:

- Cybersecurity — preventing hackers or hostile governments from hijacking AI systems.

- Alignment — making sure the AI doesn’t go rogue or act in unintended ways.

- User Harm Reduction — avoiding scenarios where AI causes financial, legal, or physical damage to users.

That’s the full list.

What’s Left Out — And Why It Matters

What’s not included?

- Safeguards for displaced workers

- Economic guardrails to protect wages

- Any meaningful discussion of how millions of jobs could vanish in the next decade

If you assumed “AI safety” meant companies were planning for a fair and humane transition, you’d be wrong. Those discussions aren’t happening inside corporate boardrooms.

They’re supposed to happen in government. Are they?

Can Government Catch Up?

But let’s be honest: when your lawmakers rely on campaign money from the very companies creating the problem, how likely are they to act in your best interest?

Ask your local, state, and federal elected officials — from city council members to congressional representatives — what specific policies they are advancing to ensure that human workers are not rendered obsolete by AI. Demand clarity, not platitudes. This is your future — and they work for you.

For reference, even the Biden administration’s Executive Order 14110 on AI (October 2023) focused more on national security and competitiveness than on robust worker protections. You can read the archived fact sheet summarizing the order on this independent legal resource.

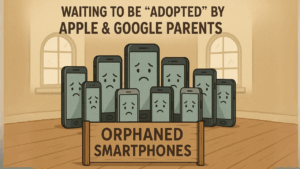

Yet even those minimal safeguards have now been revoked. In January 2025, President Trump rescinded the Biden order, and its labor-related provisions went with it. He then signed a new order titled “Removing Barriers to American Leadership in Artificial Intelligence,” which shifts the focus entirely to accelerating AI innovation and reducing regulation. It makes no mention of job displacement, retraining, or protecting workers.

This rollback has drawn concern from advocacy groups, including the ACLU and the Center for American Progress, which warn that workers are now more vulnerable to AI-driven decisions without federal protections in place. Wikipedia offers a factual overview of the Trump order here, but it does not comment on the omission of labor protections, which are notably absent from the policy. The ACLU’s response is here.

History Repeating — Only Faster

This is exactly what the Luddites were warning about (we explored that history in this post). Their point was not that technology is inherently evil, but that when disruption comes without public safeguards, the public always loses.

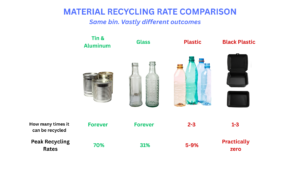

And unlike past disruptions, this isn’t just about factory workers. AI threatens to automate work in law, education, art, media, logistics, and customer service, all at once.

This is no longer science fiction. It’s a quiet takeover dressed in buzzwords like “innovation” and “efficiency.”

The Real Alignment Problem

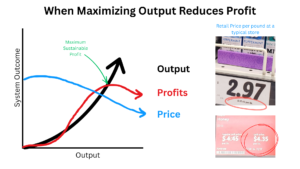

The real alignment problem? It’s not AI vs. humanity. It’s profit vs. people.

If we don’t redefine AI safety to include human safety — economic, mental, and societal — then we’re only protecting systems, not the species using them.

#UnleanThinking #1Gaea #FutureOfWork #AISafety #TechForHumans